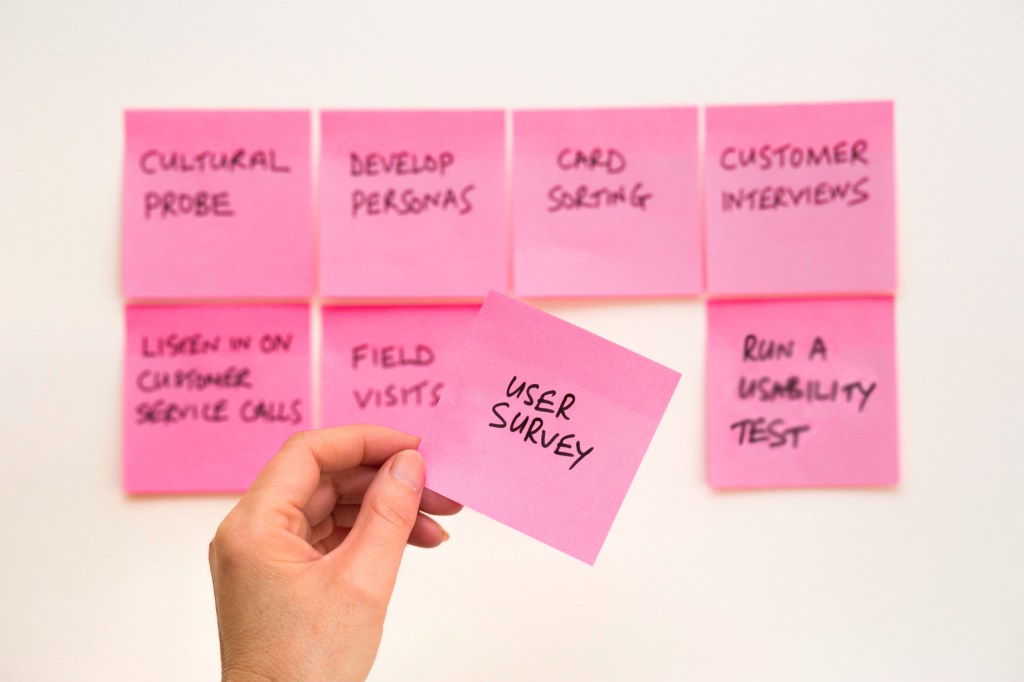

When trying to answer questions about what audiences want or need and why they behave in certain ways, it is important to supplement analytics with user research. Analytics can provide baselines, indicate possible pain points, and show you which items are most useful, while talking directly to your users can provide important context for why those numbers are what they are. Surveys can be a great starting place for getting valuable information directly from your users, allowing you to easily ask a large number of people about your site or product.

However, if you’re going to spend the time and effort to survey people, you want to make sure the results actually answer your questions and can guide you to any changes you may need to make.

One key step is to think carefully about how you are asking the questions. Writing good survey questions takes time and effort, and there are a couple of traps you can fall into.

Writing leading questions

The first writing trap is phrasing survey questions in a way that leads respondents to give you the answer you are looking for. Our brains are very good at interpreting signals in ways that we don’t realize, and it is all too easy to corrupt your data without intending to.

Feature-focused

Leading questions take a wide variety of forms, but one of the most common is framing the question so that the respondent focuses on a specific item or piece of functionality when you are actually trying to gauge how effective it is within the broader product.

An example of this is asking, “Is your eye drawn to the feature at the top of the page?” You’ll be able to get the data from this question in a simple “yes/no” format, which is easier to analyze, but you won’t really know if they noticed the feature because of the feature’s design or if they noticed it because your question called their attention to it.

Instead, a better way to ask the same question is, “What did you notice first when you visited the page?” This question doesn’t call attention to any specific item on the page, so respondents’ answers won’t be unduly influenced by it. You’ll also get a better idea of which other elements are catching people’s eyes and may be able to create further hypotheses around design changes that would make the page more usable.

In this example, if you have specific questions about the design of the feature, you can ask these questions later in the survey when guiding people’s attention to the feature itself won’t impact the data you are trying to gather.

Insinuating

Another type of leading question includes words that may indicate some kind of judgment or trigger some kind of emotion.

This can be easier to recognize in surveys for political organizations, where you’ll frequently see questions like “Do you agree or disagree that moral voters care about this issue?” Including the adjective “moral” in the second phrase creates a connection between morality and caring about the issue, whatever it may be, and it will slant responses. Instead, a better question would be, “How do you feel about this issue?”

With questions around digital products, these types of connections can be more subtle and created by innocent-seeming questions like “Do you prefer short content?”

This question has a couple of issues. First, it’s vague. What is “short content,” and are the respondents going to define it the same way that you do? Second, while “short” seems innocuous, it carries a number of connotations, including shallow, quickly written, and not substantive. Instead, you could ask, “What length of content do you prefer?” and include easily understood definitions, such as number of lines or word counts, in the multiple-choice answers.

Assumption-based

A third type of leading question includes baked-in assumptions. “How regularly would you use this app?” assumes the respondent wants to use it at all. Similarly, “How far down this page would you scroll?” assumes that respondents will scroll, as opposed to leaving the page immediately.

The former could be reframed as a two-part question: first ask whether the respondent would use the app, then ask those who said yes how frequently they would use it.
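If your survey tool supports branching (often called skip logic), the two-part structure might look something like the minimal Python sketch below. The question wording, the `next_question` function, and the plain yes/no answer format are all assumptions made for illustration; they are not taken from any particular survey platform.

```python
from typing import Optional

# Hypothetical wording for the two-part question (illustrative only).
SCREENER = "Would you use this app?"
FOLLOW_UP = "How often would you expect to use it?"


def next_question(screener_answer: str) -> Optional[str]:
    """Return the follow-up question only for respondents who answered yes."""
    if screener_answer.strip().lower() == "yes":
        return FOLLOW_UP
    # Everyone else skips the frequency question entirely.
    return None


print(next_question("Yes"))
print(next_question("No"))
```

The point of the branch is that respondents who would not use the app are never asked how often they would use it, so the frequency data only reflects people for whom the question makes sense.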

The latter question can be reframed in a few different ways, depending on what question you’re actually trying to answer, and may better be answered through a different type of user testing.

Takeaways:

Always review your questions for:

- What you’re drawing people’s attention to;

- Words that imply judgment; and

- Hidden assumptions in the wording.

Writing non-actionable questions

This brings us to the second category of mistakes when crafting survey questions: writing questions that provide you with information that isn’t well-defined or actionable. Before including any questions in a survey, you should ask, “What changes will we make because of this information?” or “How will this influence our decisions moving forward?”

Non-actionable questions frequently come from brainstorming sessions, and while they themselves aren’t great questions to ask in a survey, they can be great starting points to figure out what questions you should include.

Even if the example above, “How far down this page would you scroll?”, weren’t a leading question, would the answers be meaningful or provide information that you can act upon to improve the site? You likely don’t actually want to know how far the person would scroll; instead, you may want to know whether they find the content on the page interesting or whether they have seen a call-to-action module on the page. It is important to dig into the reason the question was proposed and then ask the specific questions that will give you the answers you need to move forward.

It’s possible that the questions you’re asking can’t be broken down into smaller, more actionable ones. Sometimes you’re looking for broad information about your audience and their needs or have bigger questions about how people navigate your site.

In that case, you have a couple of options. You can still ask those questions within a survey, but you should think carefully about their format and placement. The more questions you include, the more people drop out of the survey or skip questions, so you should place actionable questions earlier in the survey.

You should also think about other ways to get the information you’re looking for. It might be that a survey isn’t the right format to reach out to your users, and you’d be better served running user interviews. You can read more about that in our upcoming posts on user testing.

Surveys can be a powerful tool when used correctly and can help guide you in making informed decisions about your product. One last tip is to always have someone not involved in the survey review it to flag any questions that may lead respondents to answer a particular way, that may be confusing for them, or whose answers may not provide the guidance you’re looking for.

Takeaways:

- Create a broad list of questions you need answers for;

- Create your survey using the questions that would give you immediately actionable information;

- Have a colleague who is not involved review the survey and flag any issues for you; and

- Consider how you might use different user testing methods to answer other questions.